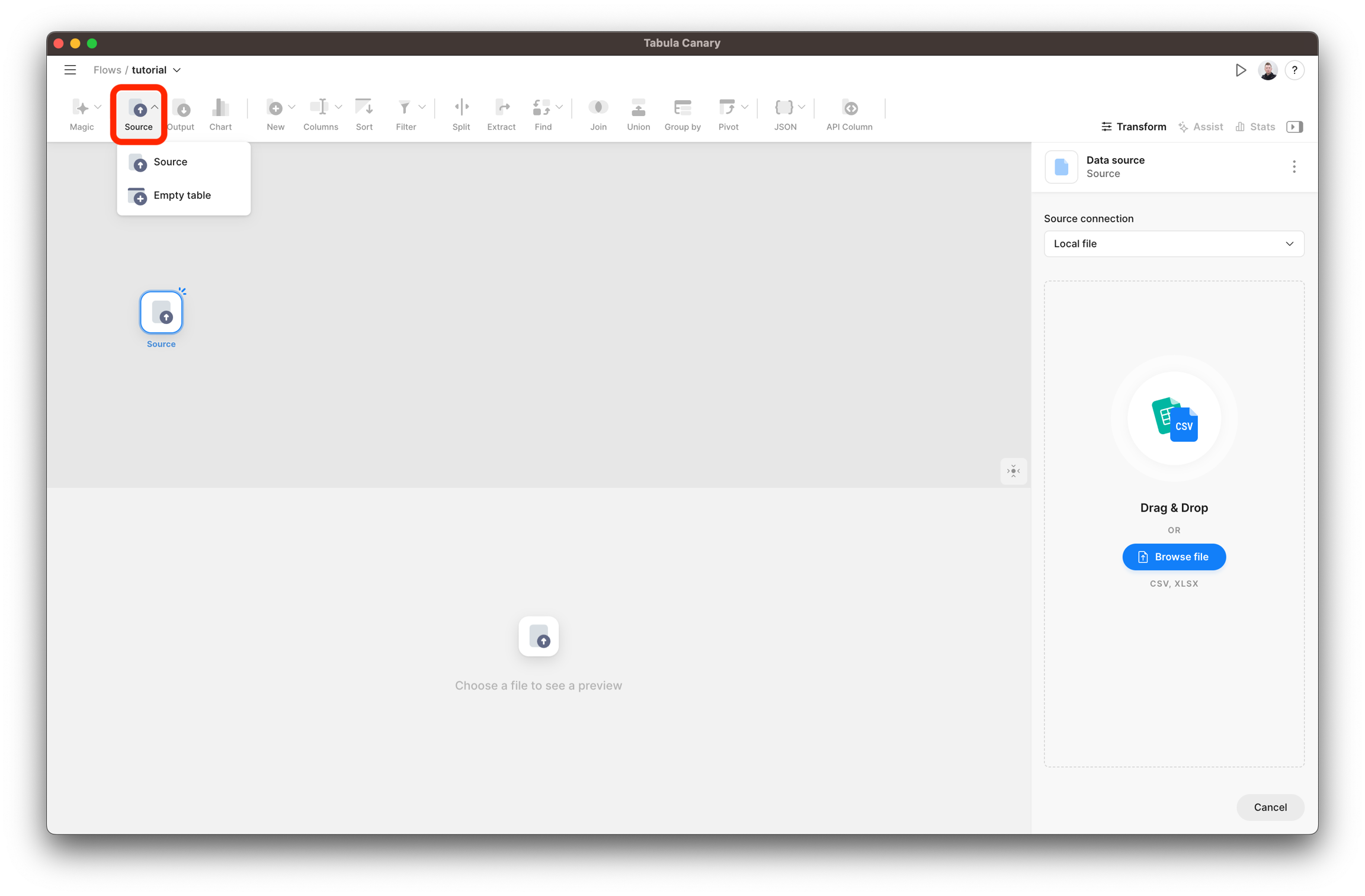

Source

Adds datasets to the flow

Overview

The Source node is the starting point of every data flow in Tomat. It connects to databases and warehouses, such as Snowflake and Postgres, or to local files, such as CSV and Excel. To import a dataset into your workflow, drag and drop the Source node onto the canvas.

If you want to connect to cloud apps like Google Ads or HubSpot, please book a demo with our team.

Settings

You can add local files from your computer or connect to databases and warehouses.

Adding a local file

To add a file (CSV or Excel) from your computer, select Local file and specify the path. Once a file is selected, a preview of the first 100 rows appears at the bottom of the screen, and the right panel shows options to customize the upload.

You can connect to a file of any size, because Tomat reads only a sample (10,000 rows) in design mode. The whole file is read when a job runs.
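The sampling idea can be illustrated with a short Python sketch. This is not Tomat's implementation; `read_sample` and `SAMPLE_ROWS` are hypothetical names, and the sketch only shows how a reader can stop after a fixed number of rows without loading the rest of the file.

```python
import csv
import io
from itertools import islice

SAMPLE_ROWS = 10_000  # sample size mentioned in the text; the name is hypothetical


def read_sample(handle, limit=SAMPLE_ROWS):
    """Return the header and at most `limit` data rows, leaving the rest unread."""
    reader = csv.reader(handle)
    header = next(reader, None)
    rows = list(islice(reader, limit))  # stops pulling lines once `limit` is reached
    return header, rows


# Small in-memory stand-in for a large file: 25 data rows, sampled down to 10.
demo = io.StringIO("id,name\n" + "".join(f"{i},row{i}\n" for i in range(25)))
header, rows = read_sample(demo, limit=10)
print(header, len(rows))
```

Because the reader is consumed lazily, the cost of building the sample depends on the limit rather than on the file size.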

First row contains headers

If your file contains column headers, enable this option to use the first row of the table as the headers.

Auto-detect data types

This option automatically checks each column's content and assigns the appropriate type (String, Integer, etc.) to the columns. If you do not select this option, all columns will be imported as Strings.
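As a rough illustration of column type detection, the sketch below picks the narrowest type that fits every non-empty value in a column. The rules and the `Float` type name are assumptions made for the example; the text only names String and Integer, and Tomat's actual detection logic is not documented here.

```python
def infer_type(values):
    """Pick the narrowest type that fits every non-empty value in a column."""
    non_empty = [v.strip() for v in values if v.strip()]
    if not non_empty:
        return "String"  # nothing to inspect, so fall back to the default type
    # Try the narrowest type first; "Float" is an assumed type name.
    for name, cast in (("Integer", int), ("Float", float)):
        try:
            for v in non_empty:
                cast(v)
            return name
        except ValueError:
            continue
    return "String"


columns = {
    "id": ["1", "2", "3"],
    "price": ["9.99", "12", ""],
    "city": ["Oslo", "Rome", "42"],
}
detected = {name: infer_type(values) for name, values in columns.items()}
print(detected)
```

With detection turned off, the equivalent behavior would be to return `"String"` for every column.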

Advanced settings

Values separated by

This parameter specifies the character used to separate columns in the file. You can choose one of the most common delimiters, specify your own, or leave automatic detection enabled.
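Automatic delimiter detection can be approximated with Python's standard `csv.Sniffer`, as in the sketch below. The candidate list and the comma fallback are assumptions for the example, and `detect_delimiter` is a hypothetical helper, not part of Tomat.

```python
import csv

CANDIDATES = ",;\t|"  # assumed set of common delimiters


def detect_delimiter(sample, default=","):
    """Guess the column separator from a text sample, falling back to `default`."""
    try:
        return csv.Sniffer().sniff(sample, delimiters=CANDIDATES).delimiter
    except csv.Error:
        # The sniffer could not decide, for example on single-column data.
        return default


sample = "id;name;city\n1;Ann;Oslo\n2;Bob;Rome\n"
print(repr(detect_delimiter(sample)))
```

Detection works from a sample of the text, so a file with an unusual separator is a case where specifying the delimiter manually is the safer choice.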

Charset

Allows you to manually specify the character encoding (charset) used in the file.

Quote symbol

In CSV and Excel files, a quote symbol (usually double quotes) encloses fields containing special characters like commas or line breaks. This ensures that the data within these quotes is treated as a single unit during parsing, maintaining accurate data structure.

Quote escape symbol

A quote escape symbol, usually a backslash (\), is used in CSV and Excel files to indicate that a quote symbol appearing inside a quoted field is part of the data. This prevents the parser from misreading that quote as the end of the field.
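The effect of the quote and quote escape symbols can be seen with Python's standard `csv` module. The sketch below assumes a double quote as the quote symbol and a backslash as the escape symbol; the sample data is made up for the example.

```python
import csv
import io

# One data row whose middle field contains both a comma and escaped quotes.
raw = 'id,comment,status\n1,"She said \\"hi\\", then left",ok\n'

reader = csv.reader(
    io.StringIO(raw),
    delimiter=",",
    quotechar='"',      # quote symbol
    escapechar="\\",    # quote escape symbol
    doublequote=False,  # quotes inside fields are escaped, not doubled
)
rows = list(reader)
print(rows[1])
```

The quoted field is returned as one value, with the comma and the inner quotes preserved as data.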

Maximum columns count

This determines the highest number of columns allowed in a single row of a spreadsheet. It restricts the data structure by specifying the maximum number of separate data fields present side by side within a single row.

Maximum cell length

This option specifies the maximum number of characters a single cell within a spreadsheet can contain. This setting is crucial for ensuring data integrity and preventing issues from long text entries, which could impact file readability, system performance, or compatibility with other software.
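To make the two limits concrete, the following sketch checks parsed rows against a maximum column count and a maximum cell length. `check_limits` is a hypothetical helper, and the text does not say how Tomat reacts when a limit is exceeded, so the sketch only reports the violations.

```python
def check_limits(rows, max_columns, max_cell_length):
    """Return a list of messages describing rows or cells that exceed the limits."""
    problems = []
    for row_number, row in enumerate(rows, start=1):
        if len(row) > max_columns:
            problems.append(
                f"row {row_number}: {len(row)} columns (limit {max_columns})"
            )
        for col_number, cell in enumerate(row, start=1):
            if len(cell) > max_cell_length:
                problems.append(
                    f"row {row_number}, column {col_number}: "
                    f"{len(cell)} characters (limit {max_cell_length})"
                )
    return problems


rows = [
    ["1", "short"],
    ["2", "x" * 12],
    ["3", "ok", "extra"],
]
for message in check_limits(rows, max_columns=2, max_cell_length=10):
    print(message)
```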

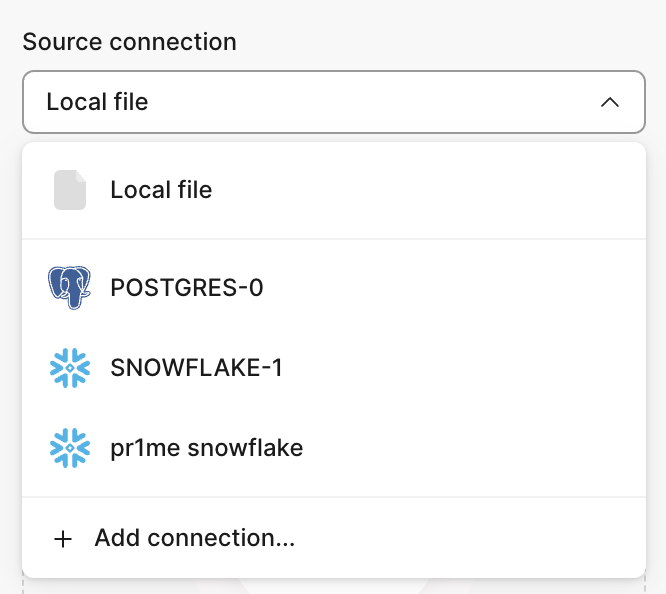

Connecting to a table or view in a cloud warehouse

To connect to a dataset from a database, you first need to create a connector. You can do this by selecting Add connection in the Source connection list, or from the main menu via the Connectors tab.

Database (only for Snowflake)

Select the database you want to connect to.

Schema

Select a schema from the list.

Tables and Views

Specify which table or view you want to add as a data source.

Columns & Filter rows

Select which columns to add to the source or filter the rows in the original table.

Don't forget to click the Create button to finalize the table import and Source node creation. We only collect a sample (10,000 rows) to increase performance and reduce costs.

Limitations

At the moment, all sources in one data flow must use the same connector to external tables. This means you cannot use datasets from Postgres and Snowflake in the same flow, but you can combine local files with any type of external connector.
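The rule can be expressed as a small check. The sketch below is illustrative only: the dictionary layout, the `kind` and `connector` keys, and the `connectors_compatible` function are assumptions, not Tomat's data model.

```python
def connectors_compatible(sources):
    """Return True if all external sources in a flow share one connector."""
    external = {
        source["connector"]
        for source in sources
        if source["kind"] != "local_file"  # local files are exempt from the rule
    }
    return len(external) <= 1


allowed_flow = [
    {"kind": "local_file", "connector": None},
    {"kind": "table", "connector": "snowflake_main"},
    {"kind": "view", "connector": "snowflake_main"},
]
blocked_flow = [
    {"kind": "table", "connector": "snowflake_main"},
    {"kind": "table", "connector": "postgres_reporting"},
]
print(connectors_compatible(allowed_flow), connectors_compatible(blocked_flow))
```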
